
Last updated: 2006-12-14 06:10:31

Martti Kuparinen <martti.kuparinen@iki.fi>

http://www.iki.fi/kuparine/comp/ubuntu/raid.html

This document describes how I installed and configured my PC running Ubuntu 6.06.1 LTS. The PC has two identical SATA hard drives configured as RAID-1 to protect me from a single disk failure. Even though I'm using RAID-1 for everything, I still make regular backups, as RAID is not a replacement for a good backup system.

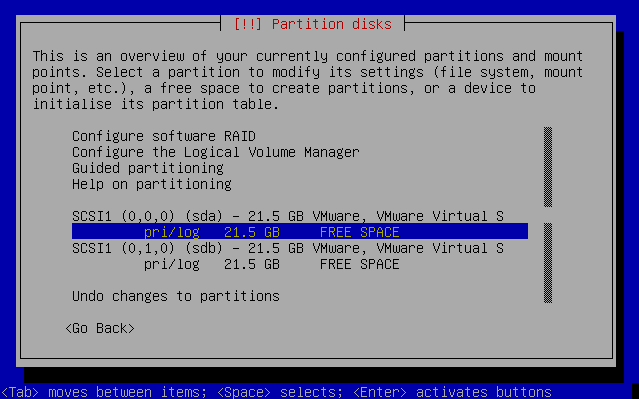

I booted with the "Alternate install CD" and made sure both disks were found by the installer. Picture 1 shows that two identical disks were found on my PC. Next I removed all existing partitions, which had previously been used by Microsoft Windows. You can easily remove all partitions on a disk by pressing Enter on top of the disk name (I pressed Enter on top of sda and sdb, one line above the currently highlighted line).

Picture 1 - Two empty disks

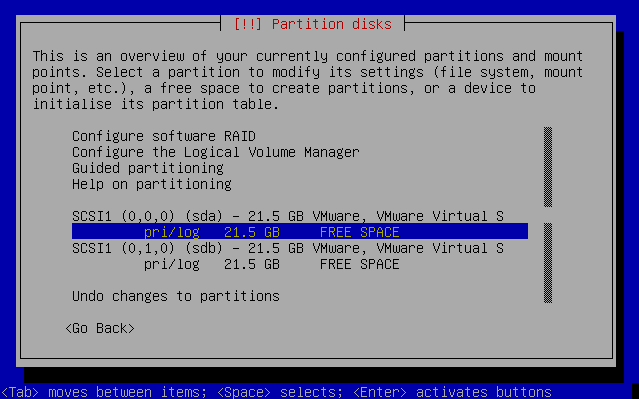

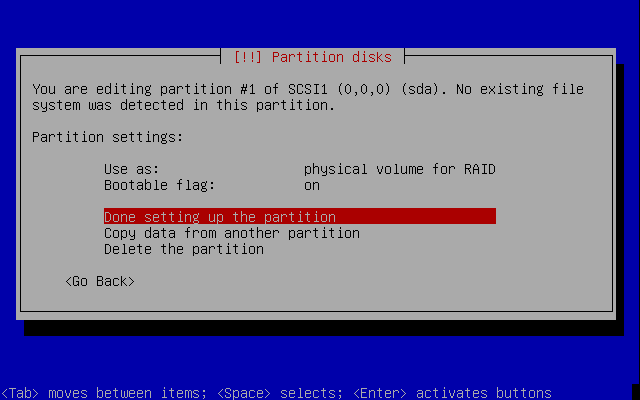

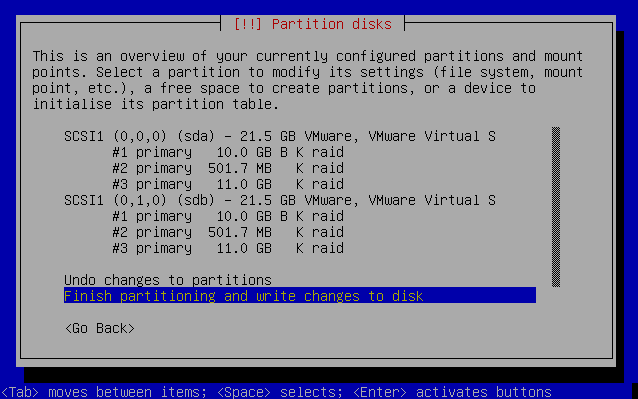

Next I created 3 partitions on both disks: 10 GB for /, 500 MB for swap and the remaining space for /home. Please note that at this stage the partition types must be "physical volume for RAID" (0xFD) instead of "Ext3 journaling file system". Also note that the first partition on each disk is marked bootable (the B flag in Picture 3). If those partitions are not marked bootable, you might not be able to boot your computer without a CD!
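Since each RAID-1 pair built later needs two partitions of the same size, it can be worth confirming the sizes once both disks are partitioned. The following is a minimal sketch, not part of the original procedure: it assumes a running Linux system with the modern sysfs layout (`/sys/class/block/<partition>/size`, reported in 512-byte sectors), and the function names `part_size` and `compare_pairs` and the `SYSFS_BLOCK` variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: compare partition sizes on two disks via sysfs.
# Assumes the modern layout /sys/class/block/<partition>/size (size in
# 512-byte sectors); override SYSFS_BLOCK to read from another directory.
SYSFS_BLOCK=${SYSFS_BLOCK:-/sys/class/block}

# part_size NAME: print the size of partition NAME (e.g. sda1) in sectors.
part_size() {
    cat "$SYSFS_BLOCK/$1/size"
}

# compare_pairs DISK_A DISK_B NUM...: print one line per partition number
# and return 1 if any pair differs in size (2 if a size cannot be read).
compare_pairs() {
    disk_a=$1
    disk_b=$2
    shift 2
    rc=0
    for n in "$@"; do
        size_a=$(part_size "$disk_a$n") || return 2
        size_b=$(part_size "$disk_b$n") || return 2
        if [ "$size_a" = "$size_b" ]; then
            echo "$disk_a$n=$size_a $disk_b$n=$size_b ok"
        else
            echo "$disk_a$n=$size_a $disk_b$n=$size_b MISMATCH"
            rc=1
        fi
    done
    return "$rc"
}
```

For example, `compare_pairs sda sdb 1 2 3` prints one line per partition pair and returns a non-zero status if any pair differs.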

Picture 2 - Setting partition type and bootable flag

Next I went up and selected "Configure software RAID" and saved my new settings.

Picture 3 - Two disks with partitions for RAID-1 volumes

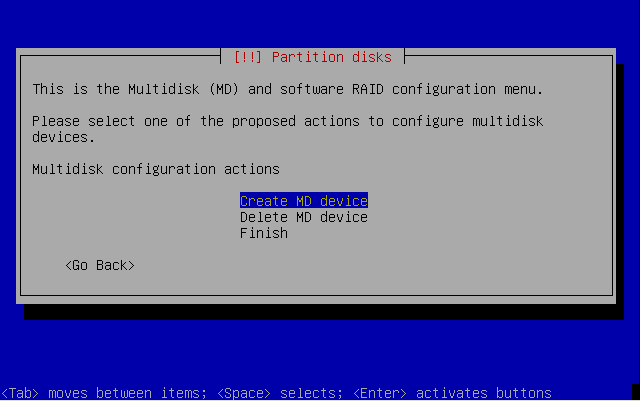

Next I created three MD devices (Linux software RAID "multiple devices"), each configured as RAID-1 with 2 active devices and 0 spare devices.

Picture 4 - Creating new MD devices

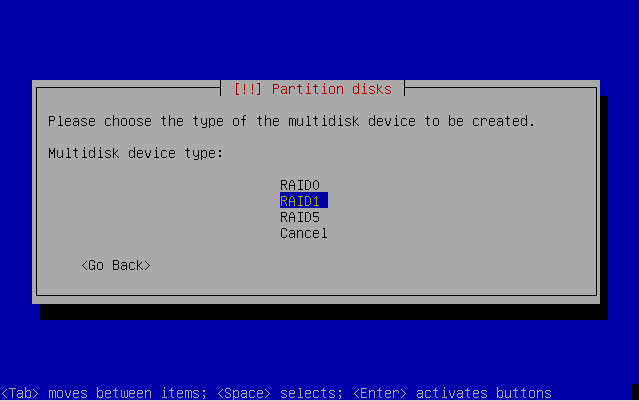

Picture 5 - Creating RAID-1

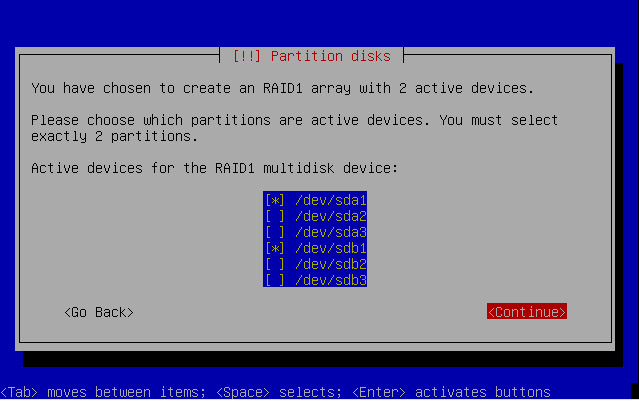

Here in Picture 5 I selected the physical disk partitions to be included in the RAID-1 set. It's important to select two partitions with identical sizes, so in my case md0=sda1+sdb1, md1=sda2+sdb2 and md2=sda3+sdb3.
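For readers who prefer the command line, the same three arrays could in principle be created by hand with `mdadm --create`. This is a hedged sketch rather than what the installer actually runs: the hypothetical `print_create_cmds` function only prints the commands for the pairing above (partition N of both disks becomes /dev/md(N-1)) so they can be reviewed first. Running them is destructive for any data on those partitions.

```shell
#!/bin/sh
# print_create_cmds DISK_A DISK_B COUNT
# Print (never run) one mdadm --create command per partition number:
# partition N of both disks becomes the two mirrors of /dev/md(N-1),
# with 2 active devices and 0 spares, matching the installer choices above.
print_create_cmds() {
    disk_a=$1
    disk_b=$2
    count=$3
    n=1
    while [ "$n" -le "$count" ]; do
        md=$((n - 1))
        echo "mdadm --create /dev/md$md --level=1 --raid-devices=2 --spare-devices=0 /dev/$disk_a$n /dev/$disk_b$n"
        n=$((n + 1))
    done
}
```

Usage: `print_create_cmds sda sdb 3` prints the commands for md0, md1 and md2.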

Picture 6 - Selecting MD components

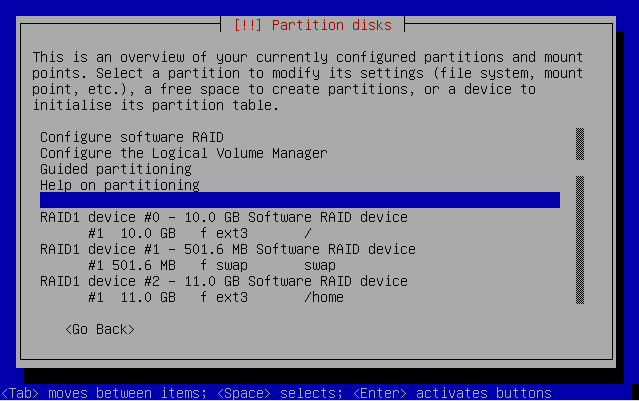

Finally it was time to select the filesystems and mount points for each MD device. In my case md0 is ext3 mounted on /, md1 is swap and md2 is ext3 mounted on /home. From this point on, the Ubuntu installation proceeds like a normal installation.
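The choices made in the installer correspond roughly to formatting each MD device and adding an fstab entry for it. The sketch below is an assumption-based illustration, not the installer's actual code: the `LAYOUT` table and the `print_format_cmds` and `print_fstab` functions are hypothetical, the commands are only printed, and the fstab options are copied from the /etc/fstab shown after installation.

```shell
#!/bin/sh
# Hypothetical layout table: device:filesystem:mount point, as chosen in
# the installer for this guide (md0 -> /, md1 -> swap, md2 -> /home).
LAYOUT='/dev/md0:ext3:/
/dev/md1:swap:none
/dev/md2:ext3:/home'

# Print (do not run) the command that would format each device.
print_format_cmds() {
    printf '%s\n' "$LAYOUT" | while IFS=: read -r dev fs mnt; do
        if [ "$fs" = swap ]; then
            echo "mkswap $dev"
        else
            echo "mkfs -t $fs $dev"
        fi
    done
}

# Print fstab lines; options mirror the /etc/fstab shown after installation.
print_fstab() {
    printf '%s\n' "$LAYOUT" | while IFS=: read -r dev fs mnt; do
        case "$mnt" in
            /)    echo "$dev / $fs defaults,errors=remount-ro 0 1" ;;
            none) echo "$dev none swap sw 0 0" ;;
            *)    echo "$dev $mnt $fs defaults 0 2" ;;
        esac
    done
}
```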

Picture 7 - Filesystems and mount points

After the installation I verified that everything (root filesystem, swap and /home) really is on the RAID-1 devices.

```
martti@ubuntu:~$ grep /dev/md /etc/fstab
/dev/md0   /       ext3   defaults,errors=remount-ro   0   1
/dev/md2   /home   ext3   defaults                     0   2
/dev/md1   none    swap   sw                           0   0
martti@ubuntu:~$ df -h / /home
Filesystem   Size   Used   Avail   Use%   Mounted on
/dev/md0     9.2G   2.1G   6.7G    24%    /
/dev/md2     11G    129M   9.5G    2%     /home
```
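The same check can be scripted. A minimal sketch, assuming the fstab names devices directly as /dev/mdN as above; an fstab that uses `UUID=` or `LABEL=` entries would be reported as NOT-RAID even when it is on RAID. `check_fstab_on_md` is a hypothetical name.

```shell
#!/bin/sh
# check_fstab_on_md [FILE]
# Prints "MOUNTPOINT DEVICE ok" for /, /home and swap when the device is a
# /dev/mdN software RAID device, "NOT-RAID" otherwise, and "missing" if no
# entry is found. Exit status is 1 if any of the three is not on /dev/mdN.
check_fstab_on_md() {
    awk '
        $1 ~ /^#/ { next }                         # skip comment lines
        $2 == "/" || $2 == "/home" || $3 == "swap" {
            key = ($3 == "swap") ? "swap" : $2     # swap has no mount point
            dev[key] = $1
        }
        END {
            rc = 0
            n = split("/ /home swap", want, " ")
            for (i = 1; i <= n; i++) {
                k = want[i]
                if (!(k in dev)) { print k " missing"; rc = 1; continue }
                if (dev[k] ~ /^\/dev\/md[0-9]+$/) {
                    print k " " dev[k] " ok"
                } else {
                    print k " " dev[k] " NOT-RAID"
                    rc = 1
                }
            }
            exit rc
        }
    ' "${1:-/etc/fstab}"
}
```

Running `check_fstab_on_md` with no argument reads /etc/fstab.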

You can check the current status of each RAID array with the command below. Note how each mdN contains two sdXN partitions, how each mdN shows that 2 of 2 components are found ([2/2]) and how both are marked as up (U). More details can be seen with the mdadm utility.

```
martti@ubuntu:~$ cat /proc/mdstat
Personalities : [raid1]
md2 : active raid1 sda3[0] sdb3[1]
      10707200 blocks [2/2] [UU]
md1 : active raid1 sda2[0] sdb2[1]
      489856 blocks [2/2] [UU]
md0 : active raid1 sda1[0] sdb1[1]
      9767424 blocks [2/2] [UU]
```

```
martti@ubuntu:~$ sudo mdadm --query --detail /dev/md0
/dev/md0:
        Version : 00.90.03
  Creation Time : Fri May 12 00:57:28 2006
     Raid Level : raid1
     Array Size : 9767424 (9.31 GiB 10.00 GB)
    Device Size : 9767424 (9.31 GiB 10.00 GB)
   Raid Devices : 2
  Total Devices : 2
Preferred Minor : 0
    Persistence : Superblock is persistent
    Update Time : Fri May 12 04:38:19 2006
          State : clean
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0
           UUID : 754cd310:4f102bc3:b590c767:672a9c4e
         Events : 0.11700

    Number   Major   Minor   RaidDevice State
       0       8        1        0      active sync   /dev/sda1
       1       8       17        1      active sync   /dev/sdb1
```
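To spot a degraded array without reading the whole output, the status brackets in /proc/mdstat can be checked for an underscore, which marks a missing component. This is a minimal sketch under that assumption; `mdstat_summary` is a hypothetical helper written against output in the format shown here.

```shell
#!/bin/sh
# mdstat_summary [FILE]
# Print "mdN STATUS ok|DEGRADED" for every array in /proc/mdstat (or FILE).
# STATUS is the [UU]/[U_] field: U = component up, _ = component missing.
# Exit status is 1 if any array is degraded.
mdstat_summary() {
    awk '
        /^md[0-9]+ :/ { name = $1; next }          # array header line
        name != "" && match($0, /\[[U_]+\]/) {     # its status line
            status = substr($0, RSTART + 1, RLENGTH - 2)
            if (index(status, "_") > 0) { state = "DEGRADED"; bad = 1 } else { state = "ok" }
            print name " " status " " state
            name = ""
        }
        END { exit (bad ? 1 : 0) }
    ' "${1:-/proc/mdstat}"
}
```

Because it exits with status 1 when any array is degraded, a call such as `mdstat_summary || echo "RAID problem"` could be used from a periodic job.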

Next I simulated a disk failure by disconnecting /dev/sdb. The system still boots, but the status shows that /dev/sdb1, /dev/sdb2 and /dev/sdb3 have disappeared from the system and that each /dev/mdN is marked as "degraded" in the State field.

```
martti@ubuntu:~$ cat /proc/mdstat
Personalities : [raid1]
md2 : active raid1 sda3[0]
      10707200 blocks [2/1] [U_]
md1 : active raid1 sda2[0]
      489856 blocks [2/1] [U_]
md0 : active raid1 sda1[0]
      9767424 blocks [2/1] [U_]
unused devices: <none>
```

```
martti@ubuntu:~$ sudo mdadm --query --detail /dev/md0
/dev/md0:
        Version : 00.90.03
  Creation Time : Fri May 12 00:57:28 2006
     Raid Level : raid1
     Array Size : 9767424 (9.31 GiB 10.00 GB)
    Device Size : 9767424 (9.31 GiB 10.00 GB)
   Raid Devices : 2
  Total Devices : 1
Preferred Minor : 0
    Persistence : Superblock is persistent
    Update Time : Fri May 12 04:45:52 2006
          State : active, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 0
  Spare Devices : 0
           UUID : 754cd310:4f102bc3:b590c767:672a9c4e
         Events : 0.11812

    Number   Major   Minor   RaidDevice State
       0       8        1        0      active sync   /dev/sda1
       1       0        0        -      removed
```
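The State line and the removed slots can also be extracted from `mdadm --detail` output. A hedged sketch: the hypothetical `detail_state` function reads the output from a file or standard input, prints a one-line summary and exits with status 1 when the State field contains "degraded". mdadm also documents a `--test` option that, together with `--detail`, reports array health through the exit status; check `man mdadm` on your system before relying on it.

```shell
#!/bin/sh
# detail_state [FILE]
# Summarize `mdadm --detail` output read from FILE or standard input:
# prints "state=<State field> removed=<number of removed slots>" and
# exits with status 1 when the State field contains "degraded".
detail_state() {
    awk -F' : ' '
        $1 ~ /^ *State$/      { state = $2 }       # e.g. "active, degraded"
        /[ \t]removed[ \t]*$/ { removed++ }        # empty slot in device table
        END {
            printf "state=%s removed=%d\n", state, removed
            if (state ~ /degraded/) exit 1
            exit 0
        }
    ' "${1:--}"
}
```

Usage: `sudo mdadm --detail /dev/md0 | detail_state`.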

Next I reconnected the disk and instructed the system to rebuild the arrays by adding the sdb partitions back with mdadm. After the rebuild finished, everything was OK again.

```
martti@ubuntu:~$ sudo mdadm --add /dev/md0 /dev/sdb1
mdadm: hot added /dev/sdb1
martti@ubuntu:~$ sudo mdadm --add /dev/md1 /dev/sdb2
mdadm: hot added /dev/sdb2
martti@ubuntu:~$ sudo mdadm --add /dev/md2 /dev/sdb3
mdadm: hot added /dev/sdb3
martti@ubuntu:~$ cat /proc/mdstat
Personalities : [raid1]
md2 : active raid1 sdb3[2] sda3[0]
      10707200 blocks [2/1] [U_]
        resync=DELAYED
md1 : active raid1 sda2[0] sdb2[1]
      489856 blocks [2/2] [UU]
md0 : active raid1 sdb1[2] sda1[0]
      9767424 blocks [2/1] [U_]
      [>....................]  recovery =  2.2% (215168/9767424) finish=16.2min speed=9780K/sec
unused devices: <none>
```
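While the rebuild runs, the recovery line in /proc/mdstat shows the progress. A minimal sketch, assuming the line format shown above; `recovery_progress` is a hypothetical helper that prints each rebuilding array with its percentage, or "delayed" when its resync is queued behind another array.

```shell
#!/bin/sh
# recovery_progress [FILE]
# For each array in /proc/mdstat (or FILE) that is rebuilding, print
# "mdN PERCENT"; print "mdN delayed" when its resync is queued.
recovery_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                 # remember current array
        /(recovery|resync) *= *[0-9.]+%/ {          # progress line
            match($0, /[0-9.]+%/)
            print name " " substr($0, RSTART, RLENGTH)
        }
        /resync=DELAYED/ { print name " delayed" }  # waiting for another array
    ' "${1:-/proc/mdstat}"
}
```

For interactive monitoring, `watch -n 10 cat /proc/mdstat` gives the same information without any script.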

Finally I reinstalled the boot loader on both disks so that the system will boot even if the primary disk (sda) disappears in the future.

```
martti@ubuntu:~$ sudo grub-install /dev/sda
martti@ubuntu:~$ sudo grub
grub> device (hd0) /dev/sdb
grub> root (hd0,0)
grub> setup (hd0)
grub> quit
```
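The grub session above maps /dev/sdb to (hd0) before running setup, so that if sda fails and sdb becomes the first BIOS disk, the boot loader written to sdb still refers to the right disk. The same commands can be generated and piped into the GRUB legacy shell in batch mode. This is a sketch assuming GRUB legacy (as shipped with Ubuntu 6.06) and the partition holding /boot being the first partition of each disk; `grub_setup_cmds` is a hypothetical name. Systems using GRUB 2 would run `grub-install /dev/sdb` instead.

```shell
#!/bin/sh
# grub_setup_cmds DISK
# Print GRUB legacy shell commands that install the boot loader into the
# MBR of DISK. DISK is mapped to (hd0) and /boot is assumed to be on its
# first partition (hd0,0), as in the session above.
grub_setup_cmds() {
    printf '%s\n' "device (hd0) $1" "root (hd0,0)" "setup (hd0)" "quit"
}
```

Usage: `grub_setup_cmds /dev/sdb | sudo grub --batch`.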

That's it. Now go back to My Ubuntu Installation guide and see how to easily configure your newly installed system.